How to Spot P-Hacking: A Case Study


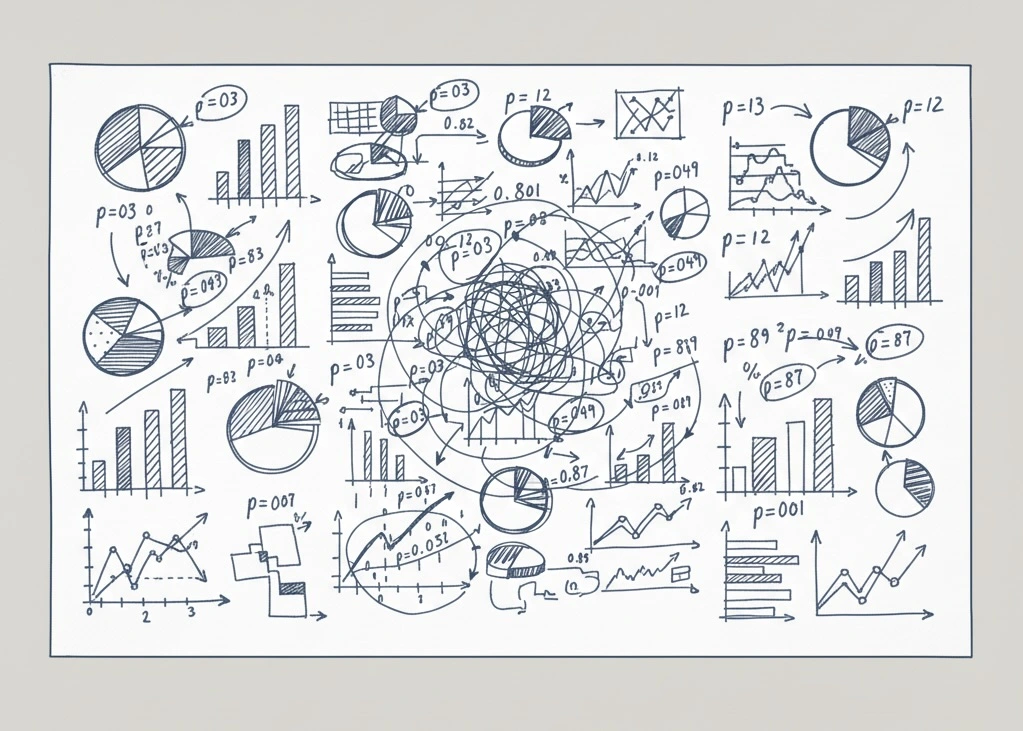

P-hacking is the practice of adjusting a data analysis until it yields a statistically significant result, whether or not a real effect exists. Done knowingly, it is cheating.

In many fields, a p-value below 0.05 is the conventional threshold for "significance." Researchers are under pressure to reach it, because results that miss it are often hard to publish. So some of them "hack" the data until they get there.

The Mechanism: How It Works

Imagine an archer. He shoots arrows at a blank wall. After he shoots, he draws a bullseye around the arrows. He claims he is a perfect shot.

This is p-hacking. The researcher tries many different analyses. They only report the one that worked.

Common tactics include:

- Multiple Comparisons: Testing 20 different variables. At a 0.05 threshold, one is expected to look significant by chance alone.

- Stopping Rules: Checking the data every day. Stopping the study the moment p < 0.05.

- Subgroup Analysis: "The drug did not work. But it worked for left-handed men over 50."
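The stopping-rule tactic in the list above can be checked with a small simulation. This is a minimal sketch, not a model of any real study: it assumes data with no true effect drawn from a standard normal distribution, a simple z-test with known standard deviation, and made-up settings (30 days, 10 observations per day). The function names are hypothetical.

```python
import random
from statistics import NormalDist

ALPHA = 0.05
STD_NORMAL = NormalDist()  # standard normal, used for the z-test


def two_sided_p(total, n):
    """Two-sided z-test p-value for H0: mean = 0, assuming known sd = 1."""
    z = total / n ** 0.5  # equals (sample mean) * sqrt(n)
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def run_study(rng, days, per_day, peek):
    """Simulate one study with no real effect; True means it 'found' p < ALPHA."""
    total, n = 0.0, 0
    for _ in range(days):
        for _ in range(per_day):
            total += rng.gauss(0.0, 1.0)
            n += 1
        # With peeking, the researcher tests every day and stops on success.
        if peek and two_sided_p(total, n) < ALPHA:
            return True
    # Without peeking, there is a single test at the planned sample size.
    return two_sided_p(total, n) < ALPHA


def false_positive_rate(peek, studies=2000, days=30, per_day=10, seed=0):
    """Fraction of no-effect studies that end up 'significant'."""
    rng = random.Random(seed)
    hits = sum(run_study(rng, days, per_day, peek) for _ in range(studies))
    return hits / studies


if __name__ == "__main__":
    print("fixed sample size:", false_positive_rate(peek=False))
    print("daily peeking:    ", false_positive_rate(peek=True))
```

Under these assumptions, the fixed-sample rate should sit near the nominal 5%, while the peeking rate should come out several times higher, even though the data contain no effect at all.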

Case Study: The Thimerosal Controversy

We can see this in action. Consider the paper "An assessment of the impact of thimerosal on childhood neurodevelopmental disorders" by Geier & Geier.

This paper claimed a link between thimerosal in vaccines and autism. Its conclusions have been widely rejected. But how did the authors get "significant" results? The analysis bears the hallmarks of p-hacking.

Red Flag 1: The "Garden of Forking Paths"

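They used the Vaccine Adverse Event Reporting System (VAERS) database. This is a passive reporting system: it collects unverified reports, and a report does not show that a vaccine caused the event. It is messy. It allows for thousands of possible comparisons.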

They could compare different vaccines. Different doses. Different ages. Different outcomes.

When you have 1,000 paths, some will look "significant" by accident: at a 0.05 cutoff, about 50 of them. They found one. They reported it. They did not account for the paths that showed nothing.
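The arithmetic behind the forking-paths problem is short enough to write out. The sketch below assumes every path is an independent test of a true null hypothesis, which is a simplification: analyses run on the same database are usually correlated. The function names are hypothetical.

```python
def chance_of_any_false_positive(num_tests, alpha=0.05):
    """P(at least one p < alpha) across independent tests with no real effect."""
    return 1 - (1 - alpha) ** num_tests


def expected_false_positives(num_tests, alpha=0.05):
    """Average number of tests that cross the threshold by chance alone."""
    return num_tests * alpha


for paths in (1, 20, 1000):
    print(
        paths,
        "paths:",
        f"chance of at least one hit = {chance_of_any_false_positive(paths):.4f},",
        f"expected hits = {expected_false_positives(paths):.1f}",
    )
```

Under the independence assumption, 20 tests already give roughly a 64% chance of at least one spurious hit, and with 1,000 paths a spurious hit is all but certain.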

Red Flag 2: No Correction

If you test 20 hypotheses, you must adjust your math. You must make the p-value threshold stricter. The simplest adjustment is the Bonferroni correction: divide the threshold by the number of tests, so 20 tests at 0.05 require p < 0.0025 each.

This paper did not do that. They ran many tests. They used the standard p < 0.05 cutoff. This makes false positives almost inevitable.
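To make the Bonferroni correction concrete, here is a minimal sketch. The p-values are invented for illustration and do not come from the paper or from any real dataset; the function names are hypothetical.

```python
def bonferroni_threshold(alpha, num_tests):
    """Per-test cutoff that keeps the family-wise error rate at or below alpha."""
    return alpha / num_tests


def significant_after_bonferroni(p_values, alpha=0.05):
    """Flag each p-value that survives the Bonferroni-adjusted cutoff."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


# Made-up p-values for 20 hypothetical tests (illustration only).
example_p_values = [0.001, 0.012, 0.030, 0.049, 0.20] + [0.5] * 15

naive_hits = sum(p < 0.05 for p in example_p_values)
corrected_hits = sum(significant_after_bonferroni(example_p_values))

print("threshold per test:", bonferroni_threshold(0.05, len(example_p_values)))
print("significant at p < 0.05:", naive_hits)
print("significant after Bonferroni:", corrected_hits)
```

In this invented example, four results pass the uncorrected cutoff but only one survives the correction. Bonferroni is deliberately conservative; other corrections exist, but the point is that some adjustment is needed.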

Red Flag 3: Biological Implausibility

P-hacking often produces results that make no sense.

Large, rigorous studies show no link between thimerosal and autism. This study used a weak dataset and found a link. When a weak study contradicts a strong study, trust the strong study. The weak study is likely noise.

The Prescription: The Reader's Checklist

You can spot this. When you read a "breakthrough" study, ask these questions:

- Did they measure many things? If they measured 50 outcomes but only talk about 1, be suspicious.

- Is the p-value borderline? A p-value of 0.049 is suspicious. A p-value of 0.001 is much harder to reach by chance or by tweaking the analysis.

- Is the sample size small? Small samples fluctuate. They are easy to hack.
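The small-sample item in the checklist above can be illustrated with a short simulation. This is a sketch under stated assumptions: every simulated study measures pure noise from a standard normal distribution, and the sample sizes (10 and 1,000) and the function name are chosen for illustration only.

```python
import random
from statistics import stdev


def spread_of_sample_means(sample_size, studies=500, seed=1):
    """Standard deviation of the observed mean across many no-effect studies."""
    rng = random.Random(seed)
    observed_means = []
    for _ in range(studies):
        total = sum(rng.gauss(0.0, 1.0) for _ in range(sample_size))
        observed_means.append(total / sample_size)
    return stdev(observed_means)


for size in (10, 1000):
    print(f"n = {size}: spread of observed means = {spread_of_sample_means(size):.3f}")
```

The true effect is zero in every simulated study, yet the observed means of the small studies scatter about ten times more widely than those of the large ones. That scatter is the raw material for p-hacking: with a small sample, a slightly different subgroup or cutoff can move the estimate a long way.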

At PaperScores, we automate this check. Our Statistical Rigor score penalizes papers that do not correct for multiple comparisons. We flag p-hacking risks automatically.

Science relies on trust. P-hacking breaks that trust. We must stop it.